This is the fourth installment in a series describing a radically more productive development and delivery environment.

The first part is here: Intro. In the previous parts I described the big picture and the Vagrant and EC2 bootstrap.

Node initialization

The previous parts described getting an operational node on Vagrant and on EC2. On Vagrant the node leverages ‘host’ virtual disk access to configure and bootstrap itself; on EC2 it leverages CloudFormation. In both cases the very last thing the node does in the bootstrap is to source the shared init script.

It is an ‘include/source’ rather than a separate execution, so that it runs at the same level as the initial bootstrap script. For EC2 this affects logging, so continued sourcing is preferred. More generally, ‘source’ lets sub-scripts set values for subsequent scripts, whereas subshells are more isolated.
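The difference between sourcing and running in a subshell is easy to see in a tiny experiment. The file and variable names below (NODE_ROLE and the temporary sub-script) are made up for illustration; the point is only that a sourced script's assignments stay visible to the caller, while a script run as a separate `bash` process cannot change the caller's variables.

```shell
#!/usr/bin/env bash
# Illustration only: NODE_ROLE and the sub-script are hypothetical.

# Write a tiny sub-script that sets a value for later scripts to use.
make_sub_script() {
  printf 'NODE_ROLE=web\n' > "$1"
}

# 'source' runs the sub-script in the current shell, so NODE_ROLE survives.
run_sourced() {
  unset NODE_ROLE
  source "$1"
  echo "${NODE_ROLE:-unset}"
}

# 'bash file' starts a child process; its assignment is lost on return.
run_in_subshell() {
  unset NODE_ROLE
  bash "$1"
  echo "${NODE_ROLE:-unset}"
}
```

In the bootstrap, the equivalent is a single `source` of the shared init script as its final line.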

init.sh

The init script first figures out where it is and sets up some important paths.

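A common Bash idiom for this first step is sketched below. The variable names (ADD_INIT_DIR, ADD_ROOT) and the assumption that the root sits one level above the init directory are hypothetical, not taken from the original init.sh. Because the script is sourced, it has to rely on `BASH_SOURCE` rather than `$0`, which would name the calling bootstrap script.

```shell
#!/usr/bin/env bash
# Sketch only: ADD_INIT_DIR and ADD_ROOT are hypothetical names, and the
# "root is one level up" layout is an assumption.

add_locate_self() {
  # Inside a function, BASH_SOURCE[0] is the file that defines it, which
  # stays correct when this file is sourced ($0 would be the caller).
  ADD_INIT_DIR="$(CDPATH= cd "$(dirname "${BASH_SOURCE[0]}")" && pwd)"
  # Assume the root of the checkout sits one level above the init directory.
  ADD_ROOT="$(CDPATH= cd "$ADD_INIT_DIR/.." && pwd)"
  # Export so that later (sourced or executed) scripts can use them.
  export ADD_INIT_DIR ADD_ROOT
}
```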

cron_1m.sh

It then gets some AWS resources, sets up a shared ‘cron’, and so on. I like a single ‘cron’ job running every minute, so it is easy to understand what is going on. This is the ‘heartbeat’ of the server configuration infrastructure: a server can decide to change in any given minute. Every minute, servers look for something that makes them want to change, and if they find it they launch an activity. The look needs to be fast: about a second or two per ‘look’, without causing much load. The ‘change’ does not have to be fast: it could take minutes to reconfigure based on the change. So while a server is changing, the ‘looking’ is disabled. For example, deploying a new WAR can take a while, so the server stops looking for new WARs while it is deploying one, and starts looking again once it is back online.
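One simple way to get "stop looking while changing" is an atomic lock around each heartbeat. The sketch below assumes a lock directory (`mkdir` either creates it or fails, atomically) and takes the look and change steps as caller-supplied commands; LOCK_DIR and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch of "look fast, change slowly, stop looking while changing".
# LOCK_DIR and the look/change callbacks are hypothetical.

LOCK_DIR="${LOCK_DIR:-/tmp/add-change.lock}"

# One heartbeat: skip entirely if a change is in progress; otherwise run
# the quick look and, only if it says so, the slow change.
heartbeat() {
  local look_cmd="$1" change_cmd="$2"
  # mkdir is atomic: only one caller can create the directory.
  if ! mkdir "$LOCK_DIR" 2>/dev/null; then
    echo "busy"      # a change is running, so do not even look
    return 0
  fi
  if "$look_cmd"; then
    "$change_cmd"    # may take minutes; the lock stays held meanwhile
    echo "changed"
  else
    echo "idle"
  fi
  rmdir "$LOCK_DIR"  # release: the next heartbeat can look again
}
```

Cron would call this from a standard every-minute entry such as `* * * * * /path/to/cron_1m.sh` (the path is a placeholder). If a node dies mid-change the lock directory is left behind, which a production script would need to detect and clean up.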

At scale (say 100 servers), with all servers synchronized via NTP, this one-minute rhythm can cause a rush on shared resources. To counter that, we ‘jitter’ the servers so each works on a different second of the minute, or even as much as several minutes later at super-scale (1000 servers). That is done within the cron_1m.sh script, after the look has established that something needs to be done.

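A minimal jitter sketch, assuming the offset is derived from the host name so that each server lands on a stable but different second. Using `cksum` as the hash and the function names are assumptions, not the original cron_1m.sh.

```shell
#!/usr/bin/env bash
# Sketch of per-server jitter; the hash choice and names are assumptions.

# Map a host name onto a stable offset in [0, window) seconds.
# cksum is POSIX, so a given name always yields the same value.
jitter_seconds() {
  local name="$1" window="${2:-60}" sum
  sum="$(printf '%s' "$name" | cksum | cut -d' ' -f1)"
  echo $(( sum % window ))
}

# Called only after the look has decided there is work to do.
sleep_with_jitter() {
  local window="${1:-60}"
  sleep "$(jitter_seconds "$(hostname)" "$window")"
}
```

`sleep_with_jitter 60` spreads servers across the minute; at super-scale a wider window such as `sleep_with_jitter 300` spreads them across several minutes.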

More specific initialization

The above activities are done for every node: they all need a heartbeat and some other common resources. Beyond that, what should be put on a particular node depends on the node type and the stack type. This is handled by simple ‘includes’ driven by the ‘nodeinfo’ that came from the configuration.

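The type-specific includes could look like the sketch below. NODE_PART, NODE_STACKTYPE, and the `part/<name>.sh` and `stacktype/<name>.sh` layout are hypothetical stand-ins for whatever the ‘nodeinfo’ actually provides. Missing files are skipped, so with no ‘part’ or ‘stacktype’ present only the common setup runs.

```shell
#!/usr/bin/env bash
# Sketch: NODE_PART, NODE_STACKTYPE and the directory layout are hypothetical.

# Source a file if it exists; sourcing keeps it at the bootstrap's level.
source_if_present() {
  if [ -f "$1" ]; then
    source "$1"
    return 0
  fi
  return 1
}

# Include the part- and stack-specific initializers named by the nodeinfo.
init_node_specific() {
  local base="$1"
  source_if_present "$base/part/${NODE_PART:-none}.sh" || true
  source_if_present "$base/stacktype/${NODE_STACKTYPE:-none}.sh" || true
}
```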

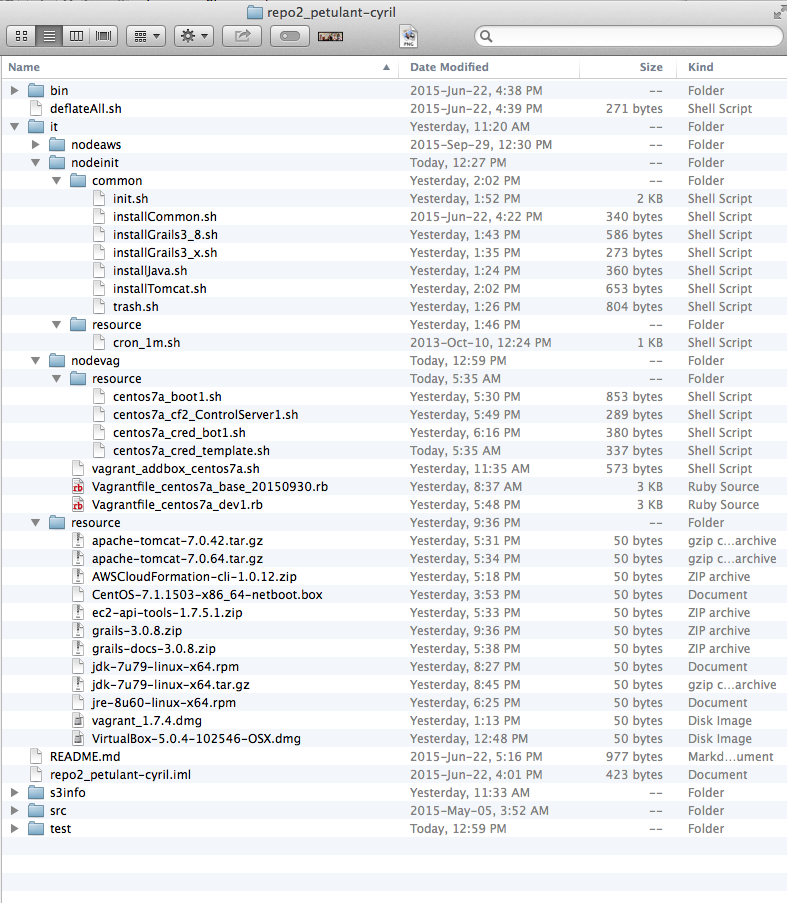

You can see the layout in the directory picture.

As of that picture, no ‘part’ or ‘stacktype’ exists yet. So a machine that is brought up is simply a heart-beating server, but one that can mutate on command every minute.

What are nodes doing every minute?

The next cool feature of ADD is that nodes do work based on the state of git repositories. For any given repository, they look for a ‘work.sh’ file within a ‘nodework’ directory, either for all types of nodes (i.e. common again) or for the specific type of node they are. This works just like the other ‘includes’, with one difference: these scripts are not sourced but executed in a subshell, since they could otherwise do weird things to each other. Running them separately also lets them avoid blocking each other (if desired).
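A sketch of the per-repository dispatch, assuming a `nodework/common/work.sh` plus `nodework/<nodetype>/work.sh` layout (the exact layout and the function name are assumptions). Each script runs in its own `bash` process; passing `parallel` as the third argument backgrounds them so they do not block each other.

```shell
#!/usr/bin/env bash
# Sketch: the nodework/<common|nodetype>/work.sh layout is an assumption.

run_work_scripts() {
  local repo="$1" node_type="$2" mode="${3:-serial}" dir
  for dir in "$repo/nodework/common" "$repo/nodework/$node_type"; do
    [ -f "$dir/work.sh" ] || continue
    # Executed by a child bash, not sourced, so one work script cannot
    # clobber another's variables or working directory.
    if [ "$mode" = "parallel" ]; then
      ( cd "$dir" && bash ./work.sh ) &
    else
      ( cd "$dir" && bash ./work.sh )
    fi
  done
  wait  # returns once any backgrounded work scripts have finished
}
```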

All of these ‘work’ scripts should quickly determine if anything has changed and then release themselves. While ‘work’ is going on, the main cron script is locked out.


work.sh

The main purpose of ‘work.sh’ is to detect changes. Any actual work goes in ‘work_ActualWork.sh’. Working backwards, the example ActualWork is simply a one-liner, so a single line appears in the cron job's log just to prove the ‘ActualWork’ was done.
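A hypothetical stand-in for such a one-line ActualWork, written as a function so it can be exercised directly; the message format is an assumption.

```shell
#!/usr/bin/env bash
# Hypothetical stand-in for the example work_ActualWork.sh: one line whose
# only job is to leave proof in the cron log that the work ran.
actual_work() {
  echo "$(date -u '+%Y-%m-%dT%H:%M:%SZ') ActualWork done"
}
```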

But ‘work.sh’ has to do a few things (very quickly) to detect whether there are relevant changes. It keeps track of state in files in the ‘repo/.temp/add’ directory. The example ‘work.sh’ detects changes to the repository based on a watched ‘path’. This allows multiple things to use the same git repository while looking at different parts of it. By default the watched path is the root, but that can be changed. No matter which ‘path’ is watched, the version of the ‘work’ is always the version of the git repository, not of the path itself. In total, there are four possible ‘outer’ states:

- The version of the work previously done is identical to the version of git now

- The version of the work previously done is different from the version of git now, but the version of the watched path is the same

- The version of the work previously done is different from the version of git now, and the watched path has changed

- There is no work previously done (the first run of the work)

Of the above, only the last two should trigger work. You can branch differently based on the first run or subsequent runs, but generally it is best to be ‘idempotent’ with the work: you change the state of the server to a new state without caring what the previous state is/was.
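The four outer states and the "only the last two trigger work" rule can be written as a small pure function. The inputs are commit ids, where an empty previous repository version means no work has been recorded yet; the state names are made up for this sketch.

```shell
#!/usr/bin/env bash
# Sketch of the four 'outer' states; state names are hypothetical.
# Args: previous repo version, current repo version,
#       previous watched-path version, current watched-path version.

classify_outer_state() {
  local prev_repo="$1" cur_repo="$2" prev_path="$3" cur_path="$4"
  if [ -z "$prev_repo" ]; then
    echo "first-run"               # no work done before
  elif [ "$prev_repo" = "$cur_repo" ]; then
    echo "unchanged"               # same git version as the last work
  elif [ "$prev_path" = "$cur_path" ]; then
    echo "repo-changed-path-same"  # changes elsewhere in the repository
  else
    echo "path-changed"            # the watched path itself changed
  fi
}

# Only the first run and a changed watched path trigger work.
should_work() {
  case "$(classify_outer_state "$@")" in
    first-run|path-changed) return 0 ;;
    *) return 1 ;;
  esac
}
```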

The ‘inner’ state issue is that the server could already be doing ‘ActualWork’, in which case you have to wait until that is done.
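One way to handle the inner state, sketched under the assumption that ActualWork is started in the background and its process id is recorded in a pid file (the file location and the log naming are hypothetical). `kill -0` sends no signal; it only checks that the process exists and may be signalled.

```shell
#!/usr/bin/env bash
# Sketch of the 'inner' state check; pid-file and log paths are hypothetical.

# Succeeds if the pid recorded in the file belongs to a live process.
work_in_progress() {
  local pidfile="$1" pid
  [ -f "$pidfile" ] || return 1
  pid="$(cat "$pidfile")"
  [ -n "$pid" ] && kill -0 "$pid" 2>/dev/null
}

# Start the slow work in the background unless it is already running,
# so that work.sh itself can return within a second or two.
start_actual_work() {
  local pidfile="$1"; shift
  if work_in_progress "$pidfile"; then
    echo "busy"
    return 0
  fi
  # Log beside the pid file so the caller is not held open by the
  # background job's stdout.
  "$@" >>"${pidfile%.pid}.log" 2>&1 &
  echo "$!" > "$pidfile"
  echo "started"
}
```

A pid can be reused by an unrelated process after the work exits, so a stricter version would also remove the pid file when the work finishes.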

The core of the work.sh script works out which of these states it is in and, only if work is needed and no ActualWork is already running, starts the ActualWork.
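A sketch of the detection half of work.sh using standard git commands: `git rev-parse HEAD` for the repository version and `git log -1 --format=%H -- <path>` for the last commit touching the watched path. The function names, the state-file names under `.temp/add`, and recording the versions only after the work finishes are assumptions.

```shell
#!/usr/bin/env bash
# Sketch of work.sh's detection step; names and state files are assumptions.

# Fetch the latest commits (the 'git pull' part of the timing).
refresh_repo() {
  git -C "$1" pull --quiet --ff-only
}

# Version of the work: the commit id of the whole repository.
repo_version() {
  git -C "$1" rev-parse HEAD
}

# Last commit that touched the watched path ('.' means the repo root).
path_version() {
  git -C "$1" log -1 --format=%H -- "${2:-.}"
}

# Print "yes" if work should start, "no" otherwise.
detect_change() {
  local repo="$1" watch="${2:-.}" state="$1/.temp/add"
  local prev_repo="" prev_path="" cur_repo cur_path
  [ -f "$state/repo_version" ] && prev_repo="$(cat "$state/repo_version")"
  [ -f "$state/path_version" ] && prev_path="$(cat "$state/path_version")"
  cur_repo="$(repo_version "$repo")" || return 2
  cur_path="$(path_version "$repo" "$watch")" || return 2
  if [ -z "$prev_repo" ]; then
    echo yes   # first run: no work recorded yet
  elif [ "$prev_repo" = "$cur_repo" ]; then
    echo no    # repository unchanged
  elif [ "$prev_path" = "$cur_path" ]; then
    echo no    # repository moved on, watched path did not
  else
    echo yes   # watched path changed
  fi
}

# Remember which versions the completed work corresponds to.
record_work() {
  local repo="$1" watch="${2:-.}" state="$1/.temp/add"
  mkdir -p "$state"
  repo_version "$repo" > "$state/repo_version"
  path_version "$repo" "$watch" > "$state/path_version"
}
```

A work.sh built this way would call `refresh_repo`, then `detect_change`, start the ActualWork only on `yes` (and only if none is already running), and call `record_work` once the work completes.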

Speed!

How fast is this detection? Basically one second to figure out which of the variations it is in, plus the time of the ‘git pull’. With a ‘small’ server and a small change, this is a single second and basically no load, in each of the three outcomes:

- Difference detected: start the work

- Difference detected (but already doing something)

- No difference